Action Recognition Using Pose Estimation with TF-OpenPose

Action recognition using pose estimation is a computer vision task that involves identifying and classifying human actions by analyzing the poses of the human body.

In this task, a deep learning model is trained to detect and track human body joints, such as the elbows, wrists, and knees, in video frames or images. Once the joints are located, the model estimates the pose of each person in the frame: the set of joint positions (keypoints) that defines the configuration of the body, from which joint angles can also be derived.
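To make the link between keypoints and joint angles concrete, here is a minimal sketch, assuming 2D pixel coordinates, that computes the angle at a joint from three keypoints. The function name `joint_angle` and the sample coordinates are illustrative and not part of OpenPose.

```python
import numpy as np

def joint_angle(a, b, c):
    """Angle in degrees at joint b formed by keypoints a-b-c (e.g. shoulder-elbow-wrist)."""
    a, b, c = (np.asarray(p, dtype=float) for p in (a, b, c))
    ba = a - b
    bc = c - b
    denom = np.linalg.norm(ba) * np.linalg.norm(bc)
    if denom == 0:
        raise ValueError("keypoints must be distinct to define an angle")
    cos = np.dot(ba, bc) / denom
    # Clip to guard against tiny floating-point overshoot outside [-1, 1]
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))

# Hypothetical 2D keypoints (x, y) in pixels
shoulder, elbow, wrist = (100, 100), (150, 100), (150, 50)
print(joint_angle(shoulder, elbow, wrist))
```

For these sample points the result is approximately 90 degrees, i.e. a right-angled elbow.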

Based on the pose, the model then identifies the action being performed by the person, such as walking, running, jumping, or dancing. The model can recognize various types of actions by analyzing the changes in the pose over time and classifying them using machine learning techniques.
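One simple way to capture the changes in the pose over time is to cut the keypoint sequence into fixed-length windows and describe each window by its normalized poses plus their frame-to-frame motion. The sketch below assumes OpenPose's 18-keypoint COCO layout (neck at index 1); the window length, stride, and normalization are hypothetical choices, and the resulting vectors could be passed to any standard classifier.

```python
import numpy as np

NECK = 1  # neck index in the 18-keypoint COCO layout used by OpenPose

def normalize_pose(keypoints):
    """Center a (num_joints, 2) pose on the neck and scale it to unit size."""
    kp = np.asarray(keypoints, dtype=float)
    centered = kp - kp[NECK]
    scale = np.max(np.linalg.norm(centered, axis=1))
    return centered / scale if scale > 0 else centered

def window_features(sequence, window=8, stride=4):
    """Turn a (num_frames, num_joints, 2) pose sequence into one feature vector per window.

    Each vector concatenates the normalized poses and their frame-to-frame
    differences, so it encodes both body configuration and motion.
    """
    seq = np.stack([normalize_pose(p) for p in sequence])
    feats = []
    for start in range(0, len(seq) - window + 1, stride):
        clip = seq[start:start + window]
        motion = np.diff(clip, axis=0)
        feats.append(np.concatenate([clip.ravel(), motion.ravel()]))
    return np.array(feats)

# Usage with a random 20-frame sequence of 18 keypoints
rng = np.random.default_rng(0)
features = window_features(rng.random((20, 18, 2)))
print(features.shape)  # (4, 540): 4 windows, 8*18*2 pose values + 7*18*2 motion values
```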

Action recognition using pose estimation has a wide range of applications, including in sports analytics, human-robot interaction, and surveillance systems.

What Are the Available Algorithms for Action Recognition …?

There are various algorithms and techniques available for action recognition using pose estimation. Here are a few examples:

- OpenPose: OpenPose is an open-source library that uses deep neural networks to estimate human body poses in real time. It can identify key body joints and their connections and has been used for action recognition in several studies.

- Pose-based Two-stream Convolutional Neural Networks: This approach uses two streams of information for action recognition. One stream processes optical flow information, while the other processes pose information. The two streams are then combined to classify the action.

- Spatial-Temporal Graph Convolutional Networks: This approach builds a graph representation of the human body, where the nodes represent the joints, spatial edges represent the connections (bones) between joints, and temporal edges connect the same joint across consecutive frames. It then uses graph convolutional networks to classify the action based on the spatial and temporal information in the graph.

- Recurrent Neural Networks: RNNs have also been used for action recognition using pose estimation. They take sequences of pose estimates as inputs and use a hidden state to capture the temporal dynamics of the poses. The RNN can then be trained to classify the action based on the sequence of poses.

- 3D Pose Estimation with Temporal Encoding: This approach estimates 3D human poses and uses temporal encoding to represent the pose sequences as a fixed-length feature vector. The feature vector is then used to classify the action using a fully connected neural network.
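As an illustration of the graph-based approach listed above, the sketch below implements one simplified spatial-temporal graph convolution in NumPy: a spatial step over the skeleton's normalized adjacency matrix, followed by a 1D convolution along time. The 5-joint toy skeleton, layer sizes, random weights, and the single shared temporal kernel are assumptions for brevity; published ST-GCN models learn a full temporal convolution per channel and stack many such layers.

```python
import numpy as np

def normalized_adjacency(num_joints, edges):
    """Symmetrically normalized adjacency with self-loops: D^-1/2 (A + I) D^-1/2."""
    a = np.eye(num_joints)
    for i, j in edges:
        a[i, j] = a[j, i] = 1.0
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))
    return a * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def st_graph_conv(x, a_norm, w_spatial, w_temporal):
    """One simplified spatial-temporal graph convolution.

    x:          (T, V, C_in) per-joint features over T frames, e.g. (x, y, score)
    a_norm:     (V, V) normalized adjacency; spatial edges follow the bones
    w_spatial:  (C_in, C_out) weights shared by all joints
    w_temporal: (K,) kernel linking each joint to itself over K consecutive frames
    """
    # Spatial step: every joint aggregates transformed features of itself and its neighbors
    h = np.maximum(np.einsum("uv,tvc,cd->tud", a_norm, x, w_spatial), 0.0)  # ReLU
    # Temporal step: 'valid' 1D convolution along time for every joint and channel
    k = len(w_temporal)
    return np.stack([np.tensordot(w_temporal, h[t:t + k], axes=(0, 0))
                     for t in range(h.shape[0] - k + 1)])

def classify(x, a_norm, w_spatial, w_temporal, w_out):
    """Global average pooling over time and joints, then a linear layer to class logits."""
    h = st_graph_conv(x, a_norm, w_spatial, w_temporal)
    return h.mean(axis=(0, 1)) @ w_out

# Hypothetical toy skeleton: joint 0 = torso center, joints 1-4 = one joint per limb
EDGES = [(0, 1), (0, 2), (0, 3), (0, 4)]
rng = np.random.default_rng(0)
a_norm = normalized_adjacency(5, EDGES)
poses = rng.random((30, 5, 3))                # 30 frames of (x, y, score) per joint
logits = classify(poses, a_norm,
                  rng.normal(size=(3, 16)),   # spatial weights
                  np.ones(3) / 3,             # temporal kernel of size 3
                  rng.normal(size=(16, 4)))   # 4 hypothetical action classes
print(logits.shape)  # (4,)
```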

These are just a few examples of the algorithms and techniques used for action recognition using pose estimation. The choice of algorithm will depend on factors such as the complexity of the action to be recognized, the available data, and the specific requirements of the application.

Here we discuss the TF-OpenPose technique in detail.

The OpenPose architecture uses a deep convolutional neural network (CNN) to perform multi-person keypoint detection and body pose estimation. The network takes an image as input and outputs a set of confidence maps and part affinity fields, which are used to locate the body keypoints and infer the pose.

The architecture consists of three main stages:

- Input processing (feature extraction): The input image is passed through a sequence of convolutional and pooling layers (in the original OpenPose paper, the first 10 layers of VGG-19) to obtain a set of feature maps that capture both low-level and high-level features of the image.

- Keypoint detection: The feature maps are used to generate a set of confidence maps that correspond to the presence and location of body keypoints. Each confidence map represents the probability of a particular keypoint being present at a given location in the image.

- Part affinity field estimation: The feature maps are also used to generate part affinity fields (PAFs) that encode the spatial relationships between body parts. Each PAF is a 2D vector field for one limb type: at every pixel on the limb it stores a unit vector pointing from one body part to the other, so it captures both the location and the orientation of the limb.
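The training targets for these two outputs can be sketched in NumPy. Following the definitions in the OpenPose paper, a ground-truth confidence map places a Gaussian peak exp(-||p - x||^2 / sigma^2) at each person's keypoint x (merged across people with a maximum), and a ground-truth PAF stores the unit vector from one keypoint to the other on pixels within a given width of the limb (averaged where people overlap). The function names and the `sigma` and `limb_width` values are illustrative assumptions.

```python
import numpy as np

def confidence_map(h, w, keypoints, sigma=2.0):
    """Ground-truth confidence map of shape (h, w) for one keypoint type.

    keypoints: list of (x, y) positions of this keypoint, one per person.
    """
    ys, xs = np.mgrid[0:h, 0:w]
    cmap = np.zeros((h, w))
    for kx, ky in keypoints:
        d2 = (xs - kx) ** 2 + (ys - ky) ** 2
        cmap = np.maximum(cmap, np.exp(-d2 / sigma ** 2))  # max merges nearby people
    return cmap

def part_affinity_field(h, w, limbs, limb_width=1.0):
    """Ground-truth PAF of shape (h, w, 2) for one limb type (e.g. elbow -> wrist).

    limbs: list of ((x1, y1), (x2, y2)) endpoint pairs, one per person.
    """
    ys, xs = np.mgrid[0:h, 0:w]
    field = np.zeros((h, w, 2))
    count = np.zeros((h, w))
    for (x1, y1), (x2, y2) in limbs:
        v = np.array([x2 - x1, y2 - y1], dtype=float)
        length = np.linalg.norm(v)
        if length == 0:
            continue
        v /= length
        dx, dy = xs - x1, ys - y1
        along = dx * v[0] + dy * v[1]            # projection onto the limb direction
        across = np.abs(dx * v[1] - dy * v[0])   # perpendicular distance from the limb axis
        on_limb = (along >= 0) & (along <= length) & (across <= limb_width)
        field[on_limb] += v
        count[on_limb] += 1
    nonzero = count > 0
    field[nonzero] /= count[nonzero][:, None]    # average where limbs overlap
    return field
```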

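The confidence maps and part affinity fields are then used to assemble body poses: candidate keypoints are taken from the peaks of the confidence maps, each candidate pair is scored by integrating the corresponding PAF along the line between the two keypoints, and the keypoints are linked together using a greedy matching algorithm. This results in a set of body poses that can be further refined using post-processing techniques.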
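The linking step can be sketched as follows: each candidate pair is scored by sampling the PAF along the segment between the two keypoints and averaging its projection onto the segment direction (a discrete approximation of the line integral), and pairs are then accepted greedily from the highest score down, with each keypoint used at most once. This is a simplified sketch for a single limb type; the sample count, the `min_score` threshold, and the function names are assumptions, and the full OpenPose pipeline adds further checks before assembling complete skeletons.

```python
import numpy as np

def paf_score(paf, p1, p2, num_samples=10):
    """Approximate line integral of a PAF (H, W, 2) along the segment p1 -> p2 (points as (x, y))."""
    p1 = np.asarray(p1, dtype=float)
    p2 = np.asarray(p2, dtype=float)
    seg = p2 - p1
    length = np.linalg.norm(seg)
    if length == 0:
        return 0.0
    unit = seg / length
    h, w = paf.shape[:2]
    total = 0.0
    for u in np.linspace(0.0, 1.0, num_samples):
        x, y = p1 + u * seg
        xi = int(np.clip(np.rint(x), 0, w - 1))
        yi = int(np.clip(np.rint(y), 0, h - 1))
        total += float(paf[yi, xi] @ unit)  # alignment of the field with the segment
    return total / num_samples

def greedy_match(paf, cands_a, cands_b, min_score=0.1):
    """Connect candidates of keypoint type A to type B for one limb type.

    Returns a list of (index_a, index_b, score), best-scoring pairs first.
    """
    scored = sorted(
        ((paf_score(paf, a, b), i, j)
         for i, a in enumerate(cands_a) for j, b in enumerate(cands_b)),
        reverse=True,
    )
    used_a, used_b, limbs = set(), set(), []
    for score, i, j in scored:
        if score < min_score:
            break  # remaining pairs are even weaker
        if i in used_a or j in used_b:
            continue  # each keypoint can belong to only one limb of this type
        limbs.append((i, j, score))
        used_a.add(i)
        used_b.add(j)
    return limbs
```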

The OpenPose architecture is designed to handle multiple people in an image and is optimized for real-time performance on GPUs. It has been trained on large datasets such as COCO and MPII and can generalize well to various applications, including action recognition, human-robot interaction, and sports analysis.

How to Implement OpenPose for Action Recognition

Below, we focus on an example-based approach to building the neural network and the loss function for OpenPose.

OpenPose is a complex multi-task neural network that involves several subnetworks and intermediate processing steps. As such, it is not practical to define the entire OpenPose model in a single code block. However, I can provide an example of how to define a single subnetwork in TensorFlow that is used in OpenPose.

In OpenPose, one of the subnetworks is responsible for predicting the heatmap for each keypoint. A heatmap is an image where each pixel indicates the likelihood that the corresponding keypoint is present at that location. The subnetwork that predicts the heatmaps is a convolutional neural network (CNN) that takes an image (in practice, the feature maps extracted from it) as input and produces one heatmap per keypoint as output.

Here is example code that defines the heatmap subnetwork in TensorFlow:

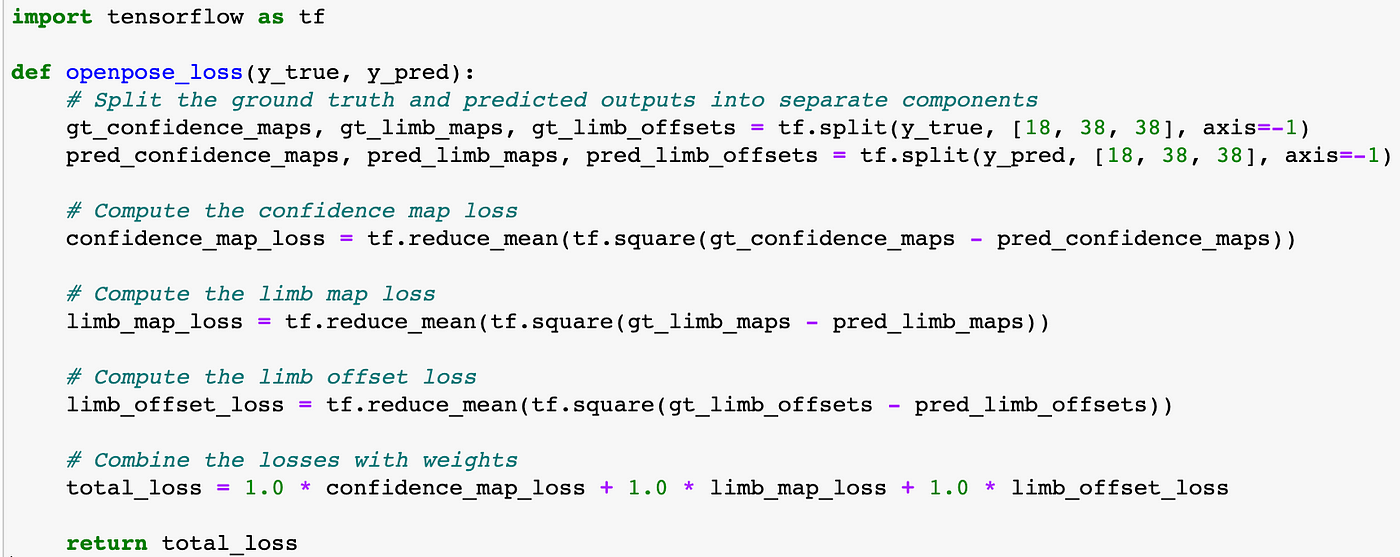
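Because the code above is only available as an image, here is a comparable text version written as a minimal Keras sketch, not a transcription of the image. It assumes 18 keypoints (as in OpenPose's COCO model), a 368 x 368 input, and layer widths chosen for illustration; three 2 x 2 max-pools give heatmaps at 1/8 of the input resolution, the same output stride as OpenPose's VGG-based front end.

```python
import tensorflow as tf

def build_heatmap_net(input_shape=(368, 368, 3), num_keypoints=18):
    """Small fully convolutional network that outputs one heatmap per keypoint."""
    inputs = tf.keras.Input(shape=input_shape)
    x = tf.keras.layers.Conv2D(64, 3, padding="same", activation="relu")(inputs)
    x = tf.keras.layers.MaxPooling2D(2)(x)
    x = tf.keras.layers.Conv2D(128, 3, padding="same", activation="relu")(x)
    x = tf.keras.layers.MaxPooling2D(2)(x)
    x = tf.keras.layers.Conv2D(256, 3, padding="same", activation="relu")(x)
    x = tf.keras.layers.MaxPooling2D(2)(x)
    x = tf.keras.layers.Conv2D(128, 3, padding="same", activation="relu")(x)
    # 1x1 convolution with a linear output: one channel per keypoint, trained with an L2 loss
    heatmaps = tf.keras.layers.Conv2D(num_keypoints, 1, padding="same", name="heatmaps")(x)
    return tf.keras.Model(inputs, heatmaps, name="heatmap_subnet")

model = build_heatmap_net()
```

With the default 368 x 368 input, the model produces 46 x 46 x 18 heatmaps; `model.summary()` lists the layer-by-layer shapes.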

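In OpenPose, the loss function is a multi-part loss: at every stage of the network, an L2 loss is computed between the predicted and ground-truth keypoint confidence maps and between the predicted and ground-truth part affinity fields (the limb maps), with pixels that lack annotations masked out. The losses from all stages are summed, which provides intermediate supervision. The exact form of the loss function can vary depending on the specific architecture and training parameters.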
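For reference, the paper that introduced OpenPose ("Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields", Cao et al., CVPR 2017) writes this loss as follows, where $t$ indexes the $T$ stages, $\mathbf{S}_j$ is the confidence map of keypoint $j$ (of $J$), $\mathbf{L}_c$ is the PAF of limb $c$ (of $C$), starred quantities are ground truth, and $W(\mathbf{p}) = 0$ at pixels $\mathbf{p}$ without annotations:

```latex
f_S^t = \sum_{j=1}^{J} \sum_{\mathbf{p}} W(\mathbf{p}) \, \lVert \mathbf{S}_j^t(\mathbf{p}) - \mathbf{S}_j^*(\mathbf{p}) \rVert_2^2,
\qquad
f_L^t = \sum_{c=1}^{C} \sum_{\mathbf{p}} W(\mathbf{p}) \, \lVert \mathbf{L}_c^t(\mathbf{p}) - \mathbf{L}_c^*(\mathbf{p}) \rVert_2^2,
\qquad
f = \sum_{t=1}^{T} \left( f_S^t + f_L^t \right)
```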

Here is example code that calculates a simplified loss function for OpenPose in TensorFlow, based on the Mean Squared Error (MSE) loss:
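A minimal sketch of such a loss, assuming the network returns a list of `(heatmaps, pafs)` predictions, one per refinement stage, and that a binary mask marks annotated pixels. The function name and tensor layout (channels last) are assumptions; it averages the masked squared error (MSE) instead of summing it, which only changes the scale.

```python
import tensorflow as tf

def openpose_loss(stage_outputs, gt_heatmaps, gt_pafs, mask):
    """Simplified OpenPose-style loss: masked squared error summed over stages.

    stage_outputs: list of (pred_heatmaps, pred_pafs) tuples, one per stage
    gt_heatmaps:   (batch, H, W, J) ground-truth confidence maps
    gt_pafs:       (batch, H, W, 2C) ground-truth part affinity fields (x and y per limb)
    mask:          (batch, H, W, 1) 1 where annotations exist, 0 elsewhere
    """
    total = 0.0
    for pred_heatmaps, pred_pafs in stage_outputs:
        # Intermediate supervision: every stage is compared with the same targets
        heat_loss = tf.reduce_mean(mask * tf.square(pred_heatmaps - gt_heatmaps))
        paf_loss = tf.reduce_mean(mask * tf.square(pred_pafs - gt_pafs))
        total += heat_loss + paf_loss
    return total
```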

Using a Pre-trained Model

Training an OpenPose network from scratch is often impractical, since it requires large annotated datasets and substantial GPU time. Instead, you can use the pre-trained OpenPose models available for various deep learning frameworks such as TensorFlow, PyTorch, and Caffe.

Here is an example of using the pre-trained OpenPose model in TensorFlow:
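A minimal sketch, assuming the open-source tf-pose-estimation package (module `tf_pose`), whose `TfPoseEstimator` class loads a frozen graph and whose `inference` method returns a list of detected humans, each with a `body_parts` dict that maps a part index to an object with normalized `x`, `y`, and `score`. Check these names against the version you install, since the repository referenced below may wrap them differently; `load_estimator` and `humans_to_array` are hypothetical helpers.

```python
import numpy as np

NUM_COCO_PARTS = 18  # COCO layout used by tf-pose-estimation: Nose=0, Neck=1, ..., LEar=17

def humans_to_array(humans, num_parts=NUM_COCO_PARTS):
    """Convert detected humans into a (num_people, num_parts, 3) array of (x, y, score).

    Coordinates stay normalized to [0, 1]; undetected parts remain zero.
    """
    poses = np.zeros((len(humans), num_parts, 3), dtype=np.float32)
    for i, human in enumerate(humans):
        for part_idx, part in human.body_parts.items():
            if part_idx < num_parts:
                poses[i, part_idx] = (part.x, part.y, part.score)
    return poses

def load_estimator(model="mobilenet_thin", target_size=(432, 368)):
    """Load a pre-trained tf-pose-estimation graph (create once, reuse for every frame)."""
    # Imported lazily so humans_to_array can be used without the package installed
    from tf_pose.estimator import TfPoseEstimator
    from tf_pose.networks import get_graph_path
    return TfPoseEstimator(get_graph_path(model), target_size=target_size)

# Usage (requires tf-pose-estimation, OpenCV, and the model graph):
# import cv2
# estimator = load_estimator()
# humans = estimator.inference(cv2.imread("frame.jpg"), resize_to_default=True, upsample_size=4.0)
# poses = humans_to_array(humans)
```

The resulting per-frame arrays can be stacked over time and fed to an action classifier.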

Below is the reference repository for testing OpenPose on your own dataset and for training your own custom model:

Repo link: https://github.com/erShoeb/Action-recognition-with-Openpose/tree/main

My test video:

Other Possible Use Cases of Pose Estimation

Action recognition using OpenPose has a wide range of applications in various fields. Here are some of the common use cases of action recognition with OpenPose:

1. Sports Analysis: OpenPose can be used to analyze the movements of athletes and provide feedback on their form, technique, and strategy. For example, it can be used to track the movements of tennis players to analyze their serve or to track the movements of a soccer player to analyze their dribbling and passing skills.

2. Dance Analysis: OpenPose can be used to analyze dance movements and provide feedback on form and technique. It can be used to track the movements of dancers and provide feedback on posture, timing, and synchronization.

3. Security: OpenPose can be used in surveillance and security applications to identify and classify human actions, such as running, walking, or crawling. It can be used to monitor crowds and identify suspicious behavior, such as sudden movements or unusual gestures.

4. Gaming: OpenPose can be used to control video games with body movements. It can be used to detect and recognize body movements and translate them into in-game actions.

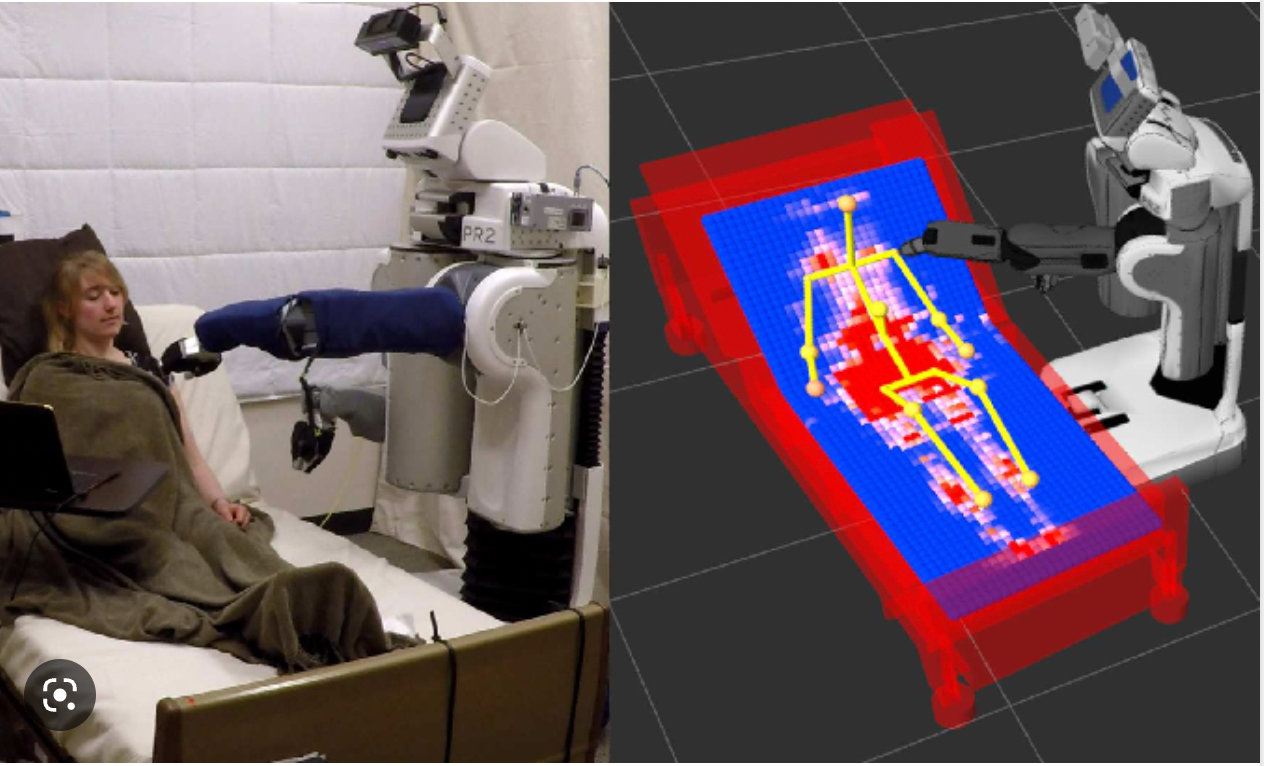

5. Robotics: OpenPose can be used in robotics to enable robots to interact with humans and perform actions based on human gestures and movements.
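For the gaming and robotics cases, even a hand-written rule on the keypoints can trigger an action. The sketch below assumes one person's pose as an (18, 3) array of (x, y, score) in the COCO layout (nose 0, right wrist 4, left wrist 7) with image y growing downward; `hands_raised`, the score threshold, and the "JUMP"/"IDLE" commands are hypothetical.

```python
import numpy as np

# Indices in the 18-keypoint COCO layout used by OpenPose
NOSE, R_WRIST, L_WRIST = 0, 4, 7

def hands_raised(pose, min_score=0.3):
    """True if both wrists are detected above the nose (smaller y = higher in the image)."""
    pose = np.asarray(pose, dtype=float)
    parts = pose[[NOSE, R_WRIST, L_WRIST]]
    if np.any(parts[:, 2] < min_score):
        return False  # a required keypoint is missing or unreliable
    nose_y = parts[0, 1]
    return bool(parts[1, 1] < nose_y and parts[2, 1] < nose_y)

def gesture_to_command(pose):
    """Map a pose to a hypothetical in-game or robot command."""
    return "JUMP" if hands_raised(pose) else "IDLE"
```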

Overall, OpenPose’s action recognition capabilities have numerous applications in various industries, including sports, healthcare, entertainment, security, gaming, and robotics.